Training a 5-point model for the OpenCV Facemark API

OpenCV recently introduced an API for detecting facial landmarks (anthropometric points of the face). There is a good article on using the Facemark API at Learn OpenCV. The dlib library has a good implementation of landmark detection, but sometimes you want to stick to a single library, especially when porting code to mobile devices or to the browser (OpenCV, incidentally, can be compiled to WebAssembly). Unfortunately, landmark detection algorithms rely on fairly large models (about 54 MB for a 68-point model), and the amount of code each client has to download may be limited. dlib ships a pre-trained 5-point model (5.44 MB), but OpenCV has no such model and does not even support one: at the moment only 68- and 29-point models are supported. A 5-point model is enough to normalize faces on the client. Below I describe how to train your own small 5-point model.

Patch for OpenCV

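As mentioned above, OpenCV does not currently support 5-point models, so I had to dig into the code of the FacemarkLBF module and fix this. We will use OpenCV's implementation of the LBF (Local Binary Features) method to find the points; OpenCV also implements the AAM method. The change is confined to data_augmentation, which appends horizontally mirrored copies of the training samples: mirroring makes symmetric points trade places, so their indices have to be swapped back, and the original code only knows the swap pairs for the layouts it already supports. Below is a patch for facemarkLBF.cpp that adds the swap pairs for the 5-point model: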

```diff
diff --git a/modules/face/src/facemarkLBF.cpp b/modules/face/src/facemarkLBF.cpp
index 50192286..dc354617 100644
--- a/modules/face/src/facemarkLBF.cpp
+++ b/modules/face/src/facemarkLBF.cpp
@@ -571,7 +571,13 @@ void FacemarkLBFImpl::data_augmentation(std::vector<Mat> &imgs, std::vector<Mat>
         shape.at<double>(j-1, 1) = tmp; \
     } while(0)

-    if (params.n_landmarks == 29) {
+    if (params.n_landmarks == 5) {
+        for (int i = N; i < (int)gt_shapes.size(); i++) {
+            SWAP(gt_shapes[i], 1, 3);
+            SWAP(gt_shapes[i], 2, 4);
+        }
+    }
+    else if (params.n_landmarks == 29) {
         for (int i = N; i < (int)gt_shapes.size(); i++) {
             SWAP(gt_shapes[i], 1, 2);
             SWAP(gt_shapes[i], 3, 4);
```

The face module lives in the opencv_contrib repository, while OpenCV itself is in the opencv repository. To build, clone both opencv and opencv_contrib (the patch above applies to opencv_contrib) and run the following script from a separate empty directory next to them; the script refers to the sources as ../opencv and ../opencv_contrib.

```sh
#!/bin/sh

cmake "../opencv" \
    -DCMAKE_BUILD_TYPE=Release \
    -DBUILD_opencv_face=ON \
    -DOPENCV_EXTRA_MODULES_PATH="../opencv_contrib/modules"

make -j$(grep -c ^processor /proc/cpuinfo)
```

Preparing the Model Training Set

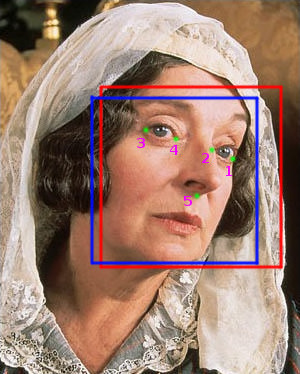

In general, the training procedure is described in the OpenCV manual. But in our case, you need to prepare a training set of photos with markup on 5 points. Fortunately, there is a corresponding training set in dlib, all that remains is to convert it to OpenCV format. The archive contains 7364 photos with the markup as in the following picture:

I wrote a script to convert the labels to the OpenCV format:

```sh
#!/bin/sh

# Write the landmarks of the first face box of every image listed in a
# dlib XML file into an OpenCV-style .pts file.
parse_xml()
{
    xml_file="$1"
    out_dir="$2"

    xmllint --xpath "//dataset/assets/images/image/@file" "$xml_file" | xargs | tr ' ' '\n' | cut -f2 -d'=' | while read -r f
    do
        echo "$f"
        out_file="${f#*/}"                 # strip the first path component
        out_file="${out_file%.jpg}.pts"    # foo.jpg -> foo.pts
        out_file="${out_dir%/}/${out_file}"

        echo "version: 1" >"$out_file"
        echo "n_points: 5" >>"$out_file"
        echo "{" >>"$out_file"
        # One "x y" line per <part> element; lines without coordinates are not printed.
        xmllint --xpath "//dataset/assets/images/image[@file='$f']/box[1]/part" "$xml_file" | tr '>' '\n' | sed -n -E 's/.*x="([0-9]+)" y="([0-9]+)".*/\1 \2/p' >>"$out_file"
        echo "}" >>"$out_file"
    done
}

test -e ./points || mkdir ./points

parse_xml ./test_cleaned.xml ./points
parse_xml ./train_cleaned.xml ./points

# Absolute paths, one per line, in the same (name-sorted) order for both lists.
( cd ./images; ls "$PWD"/* ) >./images_train.txt
( cd ./points; ls "$PWD"/* ) >./points_train.txt
```

Place the script in the dlib_faces_5points directory and run it from there. It produces a points directory with the labels, plus two files, images_train.txt and points_train.txt, which list the image files and the label files respectively.

Training and Using the Model

To train the model, I prepared a program that has to be built with CMake first. It uses the following model parameters:

| Parameter | Value | Description |
|---|---|---|
| n_landmarks | 5 | Number of points |
| initShape_n | 10 | Training sample multiplier |
| stages_n | 10 | Number of training (refinement) stages |
| tree_n | 20 | Number of trees per landmark in each stage |
| tree_depth | 5 | Depth of each tree |

The result can be seen in an excerpt from the video "15 Newly Discovered Facial Expressions".

The code is published in a repository on GitHub. A pre-trained model is available here (file size 3.6 MB). The repository also contains code that detects the points in webcam video; it starts immediately after training. Note that the model should be used only with the face detector that was used during training, in this case Viola-Jones: the regression starts from an initial shape placed relative to the detector's bounding box, so a detector that frames faces differently shifts that starting point.
